OCTOBER 16, 2018 FEATURE

A new approach to infuse spatial notions into robotics systems

a) 1% of the 2500 exploratory arm configurations mi. b) Two 3D projections of 1% of the sets Mi embedded in the 4D motor space. c) Schematic of the projected manifold and capturing of external parameters. d) Projection in 3D of the 2500 manifolds Mi (gray points) with surfaces corresponding to translations in the working space for different retinal orientations. Credit: Laflaquière et al.

Researchers at Sorbonne Universités and CNRS have recently investigated the prerequisites for the emergence of simplified spatial notions in robotic systems, based on a robot’s sensorimotor flow. Their study, pre-published on arXiv, is part of a larger project in which they explored how fundamental perceptual notions (e.g. body, space, object, color, etc.) could be instilled in biological or artificial systems.

So far, the designs of robotic systems have mainly reflected the way in which human beings perceive the world. Designing robots guided solely by human intuition, however, could limit their perceptions to those experienced by humans.

To design fully autonomous robots, researchers might thus need to step away from human-related constructs, allowing robotic agents to develop their own way of perceiving the world. According to the team of researchers at Sorbonne Universités and CNRS, a robot should gradually develop its own perceptual notions exclusively by analyzing its sensorimotor experiences and identifying meaningful patterns.

January 2018, On the “feel” of things. The sensorimotor theory of consciousness (An interview with Kevin O’Regan)

Kevin O'Regan explains the sensorimotor theory of consciousness in his interview with Cordelia Erickson-Davis. Source: O’Regan, K. & Erickson-Davis, C. (2018). On the “feel” of things: the sensorimotor theory of consciousness. An interview with Kevin O’Regan. ALIUS Bulletin, 2, 87-94.

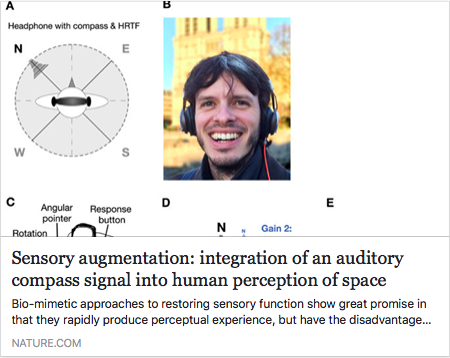

February 2017, A magnetic sixth sense: The hearSpace smartphone app transforms human experience of space

A novel sensory augmentation device, the hearSpace app, allows users to reliably hear the direction of magnetic North over headphones as a stable sound object in external space.

For this, Schumann & O'Regan developed a new approach to sensory augmentation that piggybacks the directional information of a geomagnetic compass on ecological sensory cues of distal sounds, a principle they termed contingency-mimetic sensory augmentation. In what is potentially a breakthrough advantage, contingency-mimetics allows the magnetic augmentation signal to integrate into existing spatial processes via natural mechanisms of auditory localisation.

Despite many suggestions in the literature that sensory substitution and augmentation may never become truly perceptual, their results, now published in the journal Scientific Reports, show that short training with this magnetic-auditory augmentation signal leads to long-lasting recalibration of the vestibular perception of space, either enlarging or compressing how space is perceived.

Source: Schumann, F., & O’Regan, J. K. (2017). Sensory augmentation: integration of an auditory compass signal into human perception of space. Scientific Reports, 7, 42197. http://www.nature.com/articles/srep42197

May 2016, EU Research, a journal with extensive experience working with EU-funded projects, published an article about FEEL

The FEEL project is developing a new approach to the ‘hard’ problem of consciousness, pursuing theoretical and empirical research based on sensorimotor theory. We spoke to the project’s Principal Investigator J. Kevin O’Regan about their work in developing a fully fledged theory of ‘feel’, and about the wider impact of their research.

November 2015, Kevin O'Regan's talk on how a naive agent can discover space by studying sensorimotor contingencies. Presented at BICA 2015 (video from Alexei Samsonovich on Vimeo).

June 2015, French TV programme E=M6 broadcast Kevin O'Regan's explanation of magic and illusion in the first part of the video; the last part shows Christoph Witzel and Carlijn Van Alphen’s experiment on the dress illusion.

May 2015, Article by Laura Spinney about our work on infant development

GIVE a 14-month-old baby a rake and show it a toy just out of its reach, and it will do one of a number of things. It might wave the rake about without making contact with the toy, for example, or it might drop the rake and point at the toy. What it won’t do is use the rake to bring the toy within its grasp. Not until around 18 months of age does a baby realise that the rake can function as a tool in this way—and then it does so quite suddenly.

At Paris Descartes University in France, developmental psychologist Jacqueline Fagard is interested in what it is that changes in the baby’s brain at that age, that allows it to learn that new behaviour. Another person who is interested in the answer to that question is experimental psychologist Kevin O’Regan, who works at the same university.

March 2015, Laura Spinney's article about the Color workpackage of the FEEL project

WHERE does the feeling of redness come from? You might think it is generated inside your brain when it detects light of a certain wavelength. That’s the conventional and in some ways the instinctive view. But colour scientists have known for a long time that this is wrong.

March 2015: Here is our explanation of why the famous photo of "The Dress" looks utterly different to different observers.

February 2015, Laura Spinney's article about the Math-related workpackage of the FEEL project

TRY this: close your eyes and touch your nose with your index finger. You know that you are touching yourself, but how do you know it?

December 2014, Laura Spinney's article about the "Philosophy" workpackage of the FEEL project

What’s missing is an account of the phenomenal experience of perception—what it feels like. With its emphasis on our active engagement with the world, the sensorimotor theory aims to fill that gap by providing a language with which we can articulate what our experiences are like.

October 2014, Laura Spinney, science journalist, wrote several pieces about the project. The first one is an introduction that gives an interesting overview of the sensorimotor theory.

IT’S A DILEMMA beloved of barstool philosophers everywhere: imagine that, when you and I both look at a yellow flower such as a marigold, you see blue and I see yellow. If we both describe the marigold as yellow, and if we have both grown up making yellow-type associations whenever we saw marigolds, would there be any way of telling that our perceptual experiences of the flower’s colour were fundamentally different?

September 2014, Aldebaran Robotics have posted on YouTube their first "A-talk" (modelled on TED talks), in which Kevin O'Regan talks about the sensorimotor theory and claims that robots will soon be conscious.

February 2013, Workshop on Conceptual and Mathematical Foundations of Embodied Intelligence, Max Planck Institute for Mathematics in the Sciences, Leipzig, Germany

A theoretical basis for how artificial or biological agents can construct the basic notion of space

(joint work with Alban Laflaquière, Alexander Terekhov)

May 2012, “Je tâte donc je suis”, Podcast Recherche en Cours: http://www.rechercheencours.fr/REC/Podcast/Entrees/2012/5/25_Je_tate_donc_je_suis.html

March 2012, Interview with Paul Verschure, Convergent Science Network Podcast: http://csnetwork.eu/podcast/?p=episode&name=2012-03-07_interview_kevin_oregan.mp3